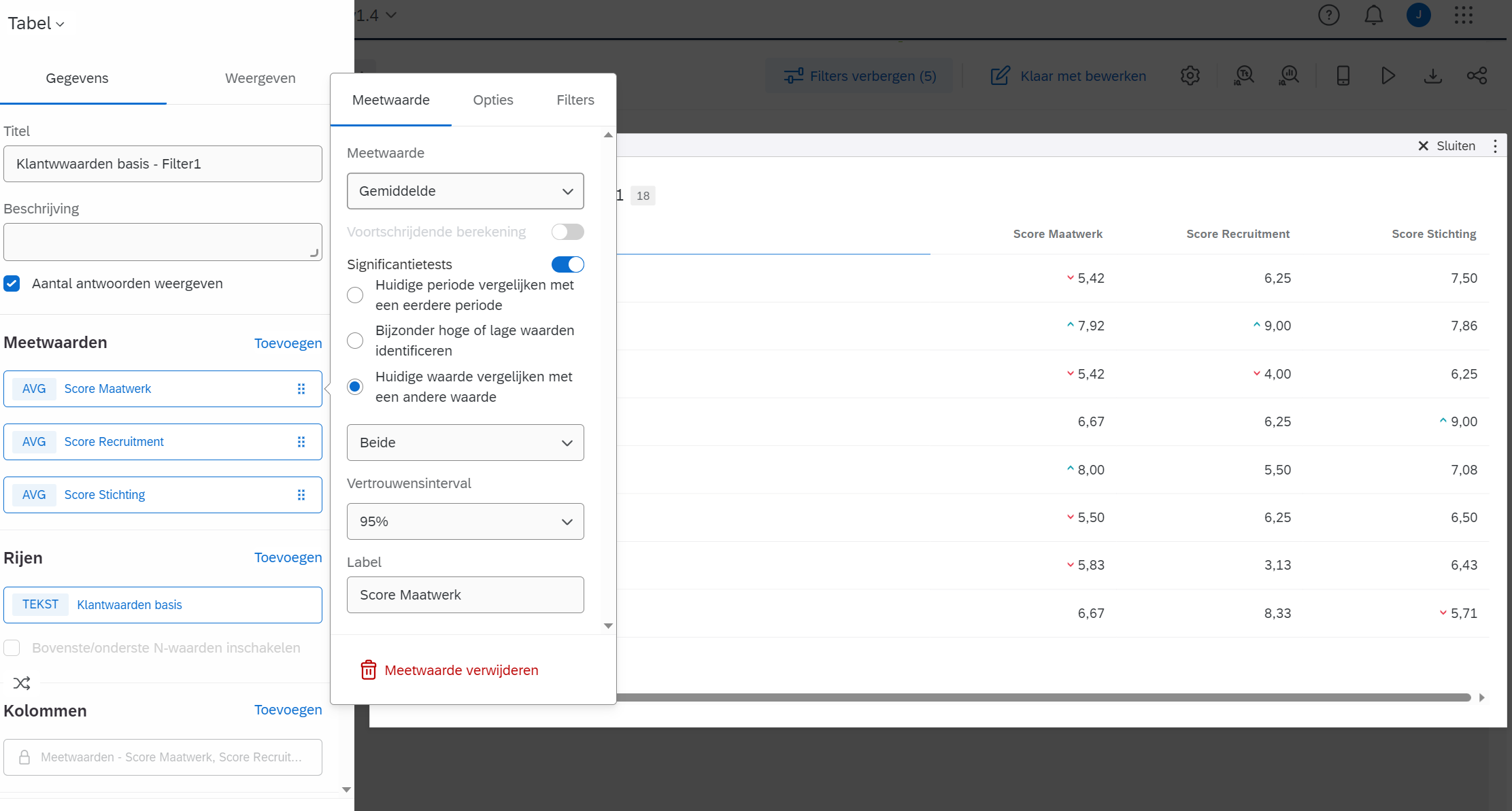

Hi community! In a Table widget in a CX Dashboard, I want to use the Significance Testing option "Compare current value to another value". However, the results look strange.

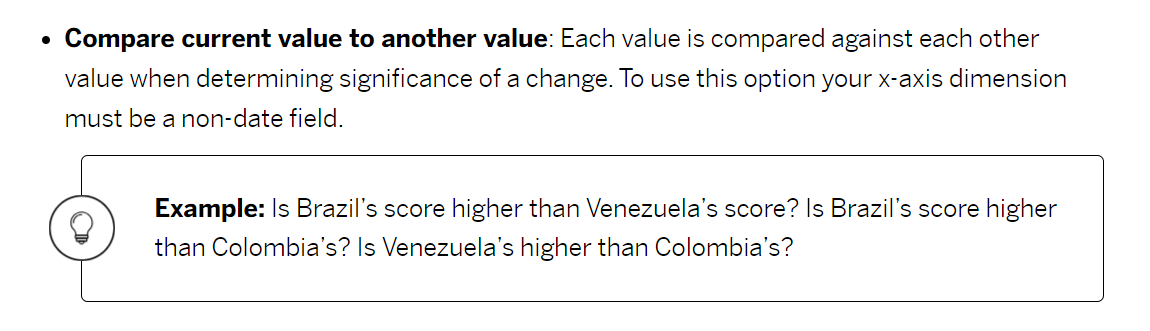

The documentation states that each value is compared against every other value when determining whether a change is significant.

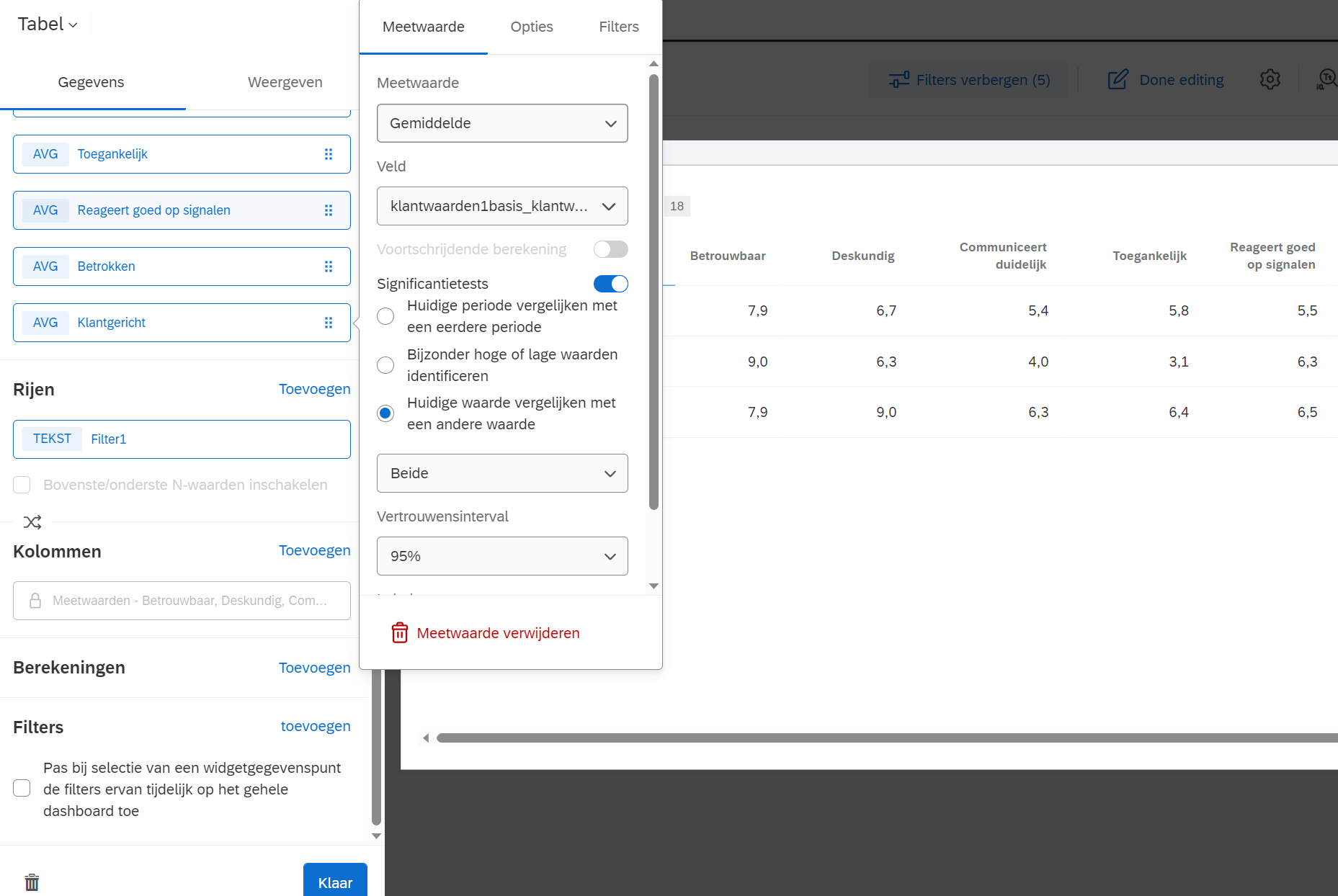

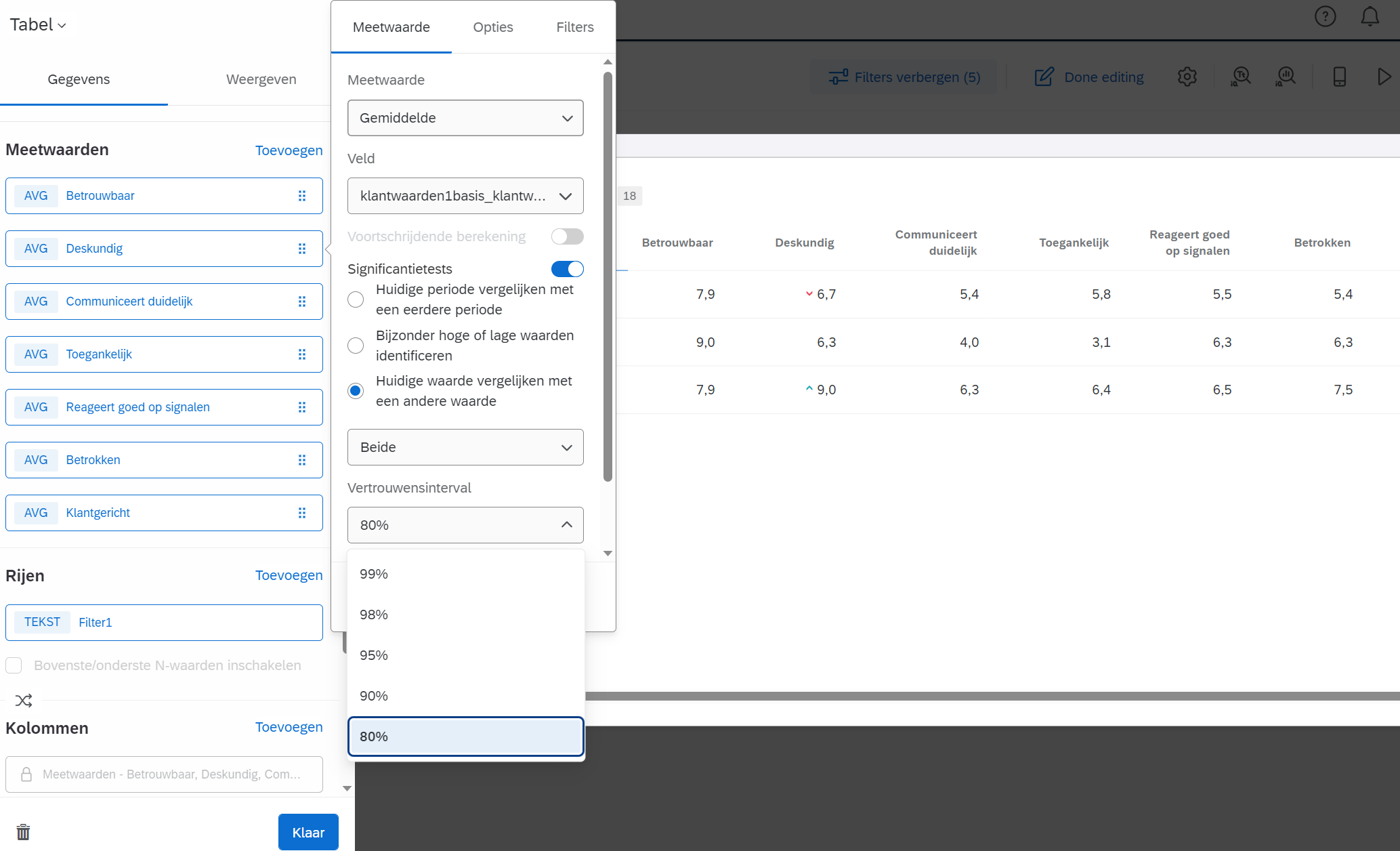

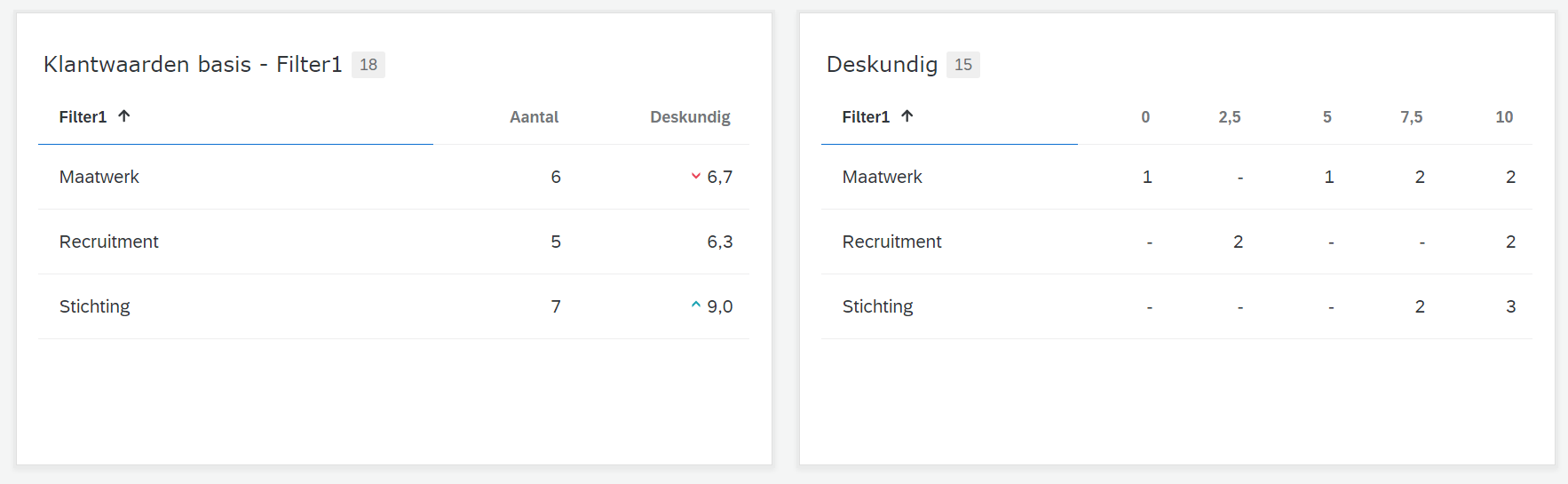

In my table, I have three filtered averages on a 10-point scale in the columns, and a group of variables in the rows.

As you can see, even though I selected the same Significance Test for all three columns, the results are not what one would expect. Why?