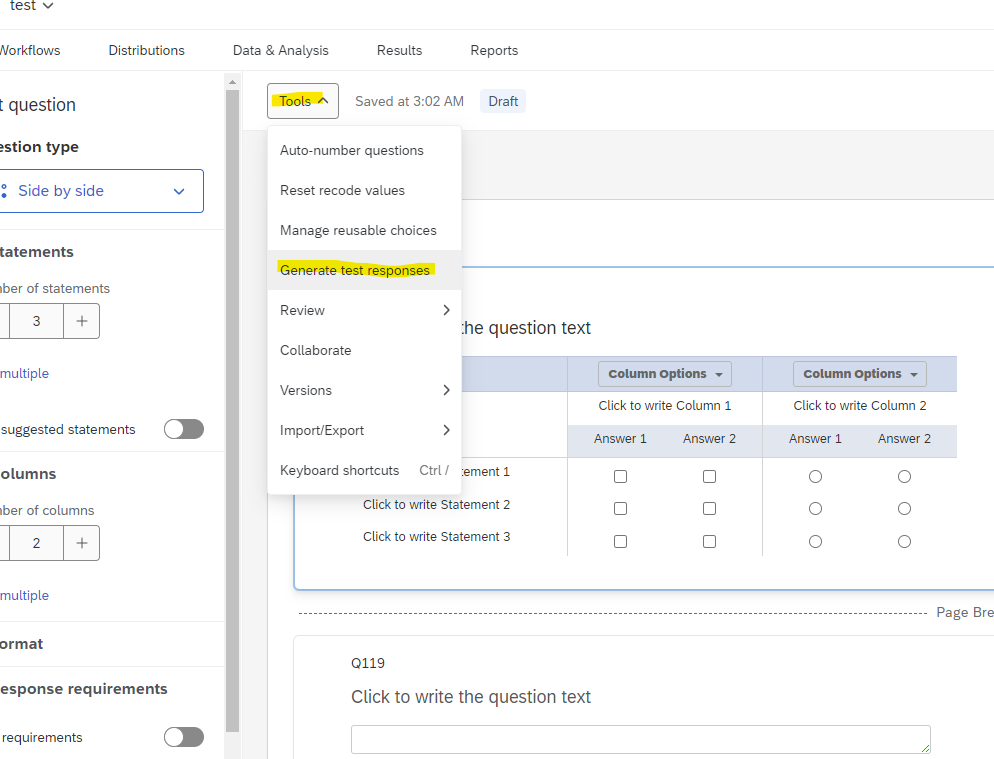

So I am the Qualtrics survey administrator for my company, and lately I have seen some instances where I create a survey from scratch, get it all built out, get the logic flows in place where we need them, and test it out on my end... and it all works as I built it. These surveys take a while to build, as some of them have upwards of 200 lines of questions: the survey is built to capture a verbatim response for virtually every possible response type to a question, which creates hundreds of logic flow pathways. But I get it working as designed when I test...

But when others go to test it... on some questions they DON’T get the desired responses or settings. Some questions that are marked “required” don’t force a reply, some questions marked “allow multiple” only take a single answer, and there are many more instances like these.

This is giving my managers and higher-ups the impression that I’m not testing things out on my end, when in fact I am spending hours or days testing! Has anyone else experienced this?

Settings in survey do not save/display correctly
