This post discusses my experience with the natural language processing component of Qualtrics (Text iQ) (https://www.qualtrics.com/iq/text-iq/). This software is used to analyze open-text comments from surveys or other qualitative data.

Part II is here - https://community.qualtrics.com/XMcommunity/discussion/18013/text-iq-sucks-but-we-love-qualtrics-part-ii/p1?new=1

Teaser

We love everything about Qualtrics, except their natural language processor (Text iQ)

Part I – My take

Overall, we love Qualtrics

I can’t say enough good things about Qualtrics, other than Text iQ. Their support is fantastic, and they have tons of training resources which are very well organized and thorough. Here’s one example on relating data for statistical purposes - https://www.qualtrics.com/support/stats-iq/analyses/relate-data/

It's been excellent for building surveys and doing various statistical analyses. Just not for Text iQ.

The Bottom Line – I find the model cumbersome, even at scale

After wrestling with Text iQ for 2 years, I find the model cumbersome and the output less than satisfying, and the end result still requires careful inspection of all comments.

I feel that the actual model is too computer’y and not able to discern thoughts – it’s basically a dictionary exercise to look at synonyms, and it cannot accurately summarize thought patterns. Pumping up the “dictionary” is not the answer in my opinion.

In addition, any AI system has difficulty with misspellings, such as “comeradery”, which I can instantly recognize as camaraderie. The software also often trips up on negated phrasing, e.g., “This was not the most awesome semester I’ve had”. And it surely can’t understand sarcasm and satire (which humans also have a hard time with [especially my wife …])

Although the appeal of Text iQ is that it can read massive amounts of comments better than a human, it has turned out to be a less than satisfactory model for us. So even with massive amounts of comments, a bad model is still a bad model.

With Text iQ, it would take approximately one day per comments question for me to properly categorize everything, but I would still tell the sponsor they needed to review every comment for best assessment.

For our environment, I am now recommending full manual review and categorization of comments.

Text iQ probably works in other environments, just not for us.

Our environment

I work at a 4-year college, so our population groups are college students, faculty, and staff. Student population is around 4,500. There are various surveys throughout the year, ranging from academic preferences to summer activities to pre-graduation assessments of the institution.

The number of comments we deal with is relatively small – fewer than 1,000 for most surveys (for 5 or so questions), and the upper limit is around 2,000 comments. Comments can be short and sweet or run to 5 sentences or more.

My experience that leads me to the conclusion that it’s better to review comments manually

I stumbled upon three factors that were light-bulb moments for me. Once I realized these factors, I plowed / zipped through a key survey with around 750 comments, and I felt the end result was extremely helpful and 10,000x better than Text iQ. Our manual solution was one that anyone could consume (and that many people SHOULD consume). Contrast that with the end result of Text iQ, which for us is always “here are some topics and their sentiment (and some bar charts), but you still have to read the comments carefully.”

1. Sorting the comments alphabetically does wonders for the analysis – you can see thought patterns

The first factor that swayed me was plumb luck. Because I had loaded up the comments to Business Objects (and then downloaded to Excel), the comments were sorted alphabetically. And this was an eye-opener – I could see patterns in the comments, something I don’t think the computer can do, since it’s just looking at individual comments and comparing them to its giant dictionary.

I then realized that I could make this pattern search even better by removing first words (such as “The ”, “My ”, etc.) from the beginning of the comment. Of course, the computer is also going to ignore these words, but once I re-sorted, the patterns emerged even more, e.g.,

- My friends … vs. friends …

- Leadership was disappointing vs. The leadership team was outstanding
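For anyone who would rather script this step than do it in Excel, here is a minimal sketch of the same idea in Python. It is not what I actually ran: the list of leading words beyond “The” and “My” and the full wording of two of the sample comments are made up for illustration.

```python
import re

# Leading words to drop before sorting. The post only names "The " and "My ";
# "a" and "an" are assumptions added for illustration.
LEADING_WORDS = ("the", "my", "a", "an")

# Matches one leading filler word plus the whitespace after it.
_PREFIX = re.compile(r"^\s*(?:" + "|".join(LEADING_WORDS) + r")\s+", re.IGNORECASE)


def sort_key(comment: str) -> str:
    """Return the comment lowercased, with one leading filler word removed."""
    return _PREFIX.sub("", comment, count=1).strip().lower()


def sort_comments(comments):
    """Sort comments alphabetically, ignoring case and a leading filler word."""
    return sorted(comments, key=sort_key)


# Sample comments; two are from the post, two are invented to show the grouping.
comments = [
    "The leadership team was outstanding",
    "My friends made it worthwhile",
    "Leadership was disappointing",
    "Friends and the people I met",
]

for comment in sort_comments(comments):
    print(comment)
```

The original text of each comment is left untouched; only the sort key changes, so the “friends” comments and the “leadership” comments end up next to each other.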

2. The computer can’t think and so is rudimentary at categorizing comments

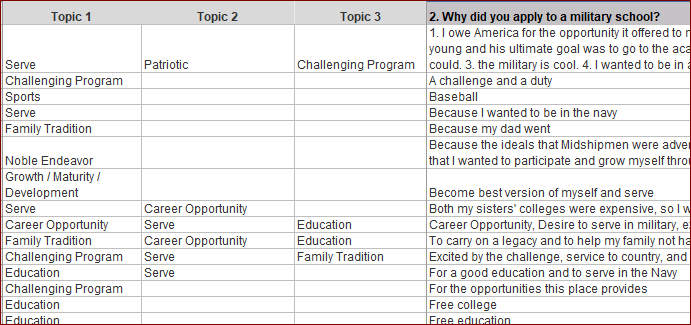

The second factor was that I categorized comments in ways that the respondent logically implied but did not explicitly state, the prime example being to categorize these comments as “Noble Endeavor” -

- It's something worth doing. I have purpose in uniform, and

- It gives me something higher to reach for

I doubt the computer would choose “Noble Endeavor” for these comments. Plus I’ve seen Qualtrics Text iQ in action enough to know it’s only going to pick up exact words from the comment as the “topic”.

3. The computer doesn’t know jargon or acronyms, and trips up on misspellings

The final factor was recognizing that the computer doesn’t know jargon, acronyms, or our users. Plus any AI system has difficulty with misspellings, as I mentioned above (e.g., comeradery). Since I understood the perspective of the respondents (their language and nuances), I could assess their comments rather quickly. I also realized full well that Qualtrics could not produce the same thing. I didn’t even take the time to test my theory – I have already spent 2 years proving it.

Here’s a third example – I took a simple comment of “experiences and people I met” and created two categories:

- Relationships / Community

- Unique Opportunities

And I placed many other comments into those two categories.

Here is the template I used. The comments were sorted alphabetically, and thought patterns emerged. And after adding the categories (topics), you can see patterns there as well.
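If you want to tally the categories outside of Excel, the “one comment, several categories” idea can be sketched in a few lines of Python. This is only a rough equivalent, not the template itself; the rows reuse comments and categories quoted earlier in this post, and the function name is hypothetical.

```python
from collections import Counter

# Each row: (comment, categories assigned by a human reviewer).
# The comments and category names are the examples quoted in this post.
tagged = [
    ("experiences and people I met",
     ["Relationships / Community", "Unique Opportunities"]),
    ("It gives me something higher to reach for", ["Noble Endeavor"]),
    ("It's something worth doing. I have purpose in uniform", ["Noble Endeavor"]),
]


def category_counts(rows):
    """Count how many comments were placed in each category."""
    counts = Counter()
    for _comment, categories in rows:
        # A set ensures a category is counted once per comment.
        counts.update(set(categories))
    return counts


counts = category_counts(tagged)
for category, n in counts.most_common():
    print(f"{n:3d}  {category}")
```

The categories still come from a person reading each comment; the script only adds up what the reviewer decided.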

Qualtrics won’t work even if I review every comment and create topics on the fly

After writing most of this article, I thought I’d give Text iQ a try again. But it’s still a no-go for me.

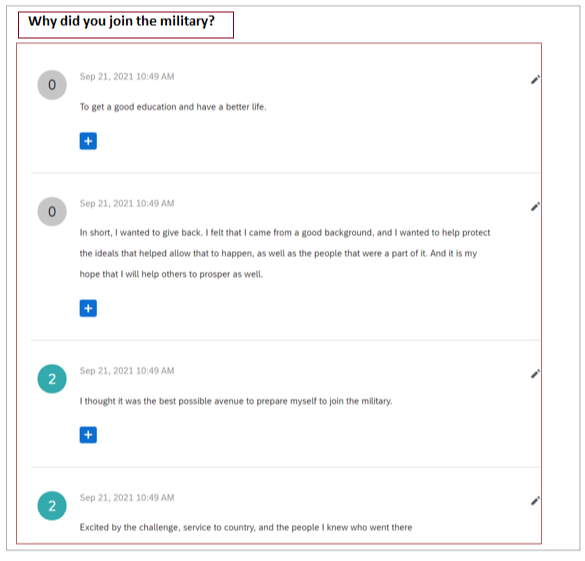

In Text iQ, you can create multiple topics for one comment on the fly. This is exactly the model that I use in Excel, but it won’t work in Text iQ, for two reasons: a) you can’t sort the comments alphabetically, and b) you can only see a couple of comments at a time, whereas in Excel you can see the patterns 1,000x better.

Here’s the Qualtrics view. It’s too hard to wrestle with (and too much white space), while Excel is much easier (see screen shot just above).

continued in Part 2

Text iQ is pretty lame, but other than that, Qualtrics is great (Part 1)

Best answer by AmaraW

Hi @WilliamPeckUSNA! If you haven't yet already, we’d recommend posting this in our Product Ideas category, a home for feature requests. If you’re not sure how to post your idea, check out our Ideation Guidelines and our Policy & Procedures.
