Hi everyone,

This will be a bit lengthy, and I have been unable to find a similar question previously, so I am creating a fresh post.

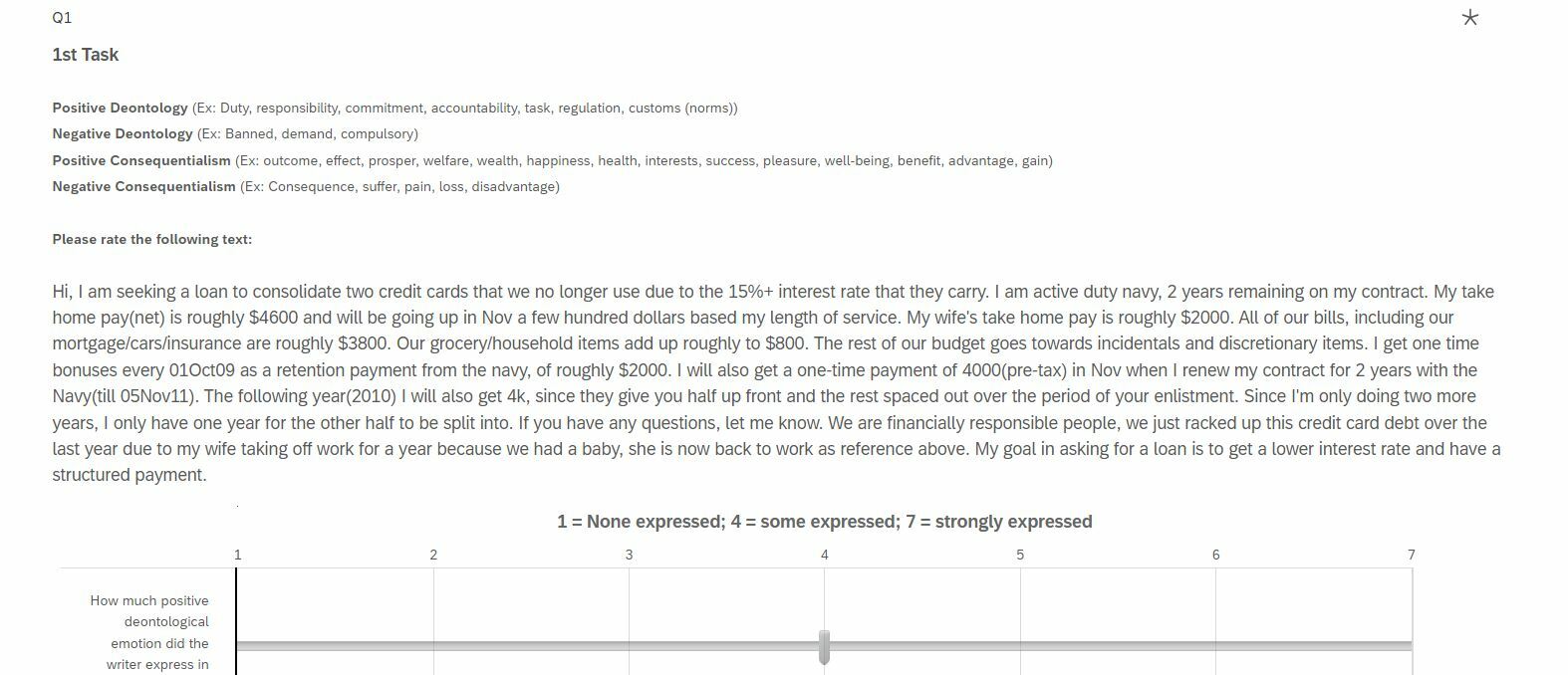

My research lab plans to run an online survey through Prolific.com (UK version of MTurk) for an English text-coding task. We want to create a survey in which participants read a passage of text and then rate it against given criteria; pretty simple. I have attached an image of an example question we recently used in a pre-test survey.

Here is my dilemma and where I need some help:

Context:

1) We want to rate 5000 different text documents, which we have decided will require 200 people to rate 25 each (200 × 25 = 5000). We also need to control statistically for quality, so every participant rates the same, say, 5 documents.

2) For those same 5 documents, we can make a separate block and present it first in the Qualtrics Survey Flow.

3) However, the 5000 documents need to be split into blocks of 25 (one block per person), and each person must be randomly assigned one block. This requires the Randomizer with even distribution. No problem.
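For illustration, here is a minimal Python sketch of the setup in points 1) to 3): shuffle the 5000 document IDs once, cut them into 200 blocks of 25, and give each new participant the 5 shared documents followed by one of the blocks presented the fewest times so far. The "least-presented" rule is only an assumption about how even distribution behaves (Qualtrics' actual algorithm may differ), and names such as `make_blocks`, `assign_evenly` and the `shared_*` IDs are hypothetical.

```python
import random

N_DOCS = 5000      # documents to be rated (from the post)
BLOCK_SIZE = 25    # documents per participant (from the post)
N_SHARED = 5       # quality-control documents that every participant rates

def make_blocks(doc_ids, block_size, seed=0):
    """Shuffle the document IDs once, then cut them into fixed-size blocks."""
    rng = random.Random(seed)
    ids = list(doc_ids)
    rng.shuffle(ids)
    return [ids[i:i + block_size] for i in range(0, len(ids), block_size)]

def assign_evenly(counts, rng):
    """Assumed even-distribution rule: pick one of the least-presented blocks at random."""
    fewest = min(counts)
    candidates = [i for i, c in enumerate(counts) if c == fewest]
    choice = rng.choice(candidates)
    counts[choice] += 1
    return choice

blocks = make_blocks(range(N_DOCS), BLOCK_SIZE)
shared_docs = [f"shared_{k}" for k in range(N_SHARED)]  # hypothetical IDs
counts = [0] * len(blocks)
rng = random.Random(1)

# Each participant sees the shared block first, then their assigned block.
assigned = [assign_evenly(counts, rng) for _ in range(200)]
first_participant = shared_docs + blocks[assigned[0]]

print(len(blocks), len(set(assigned)), len(first_participant))  # 200 200 30
```

With exactly 200 arrivals who all finish, every block is used once; trouble only starts when some of those arrivals never complete.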

Problem:

1) However, because this is an online survey of people we cannot personally supervise, controlling for quality requires screening questions, attention checks and comprehension questions. Participants who fail these are removed from the survey. As far as I know, Qualtrics then treats their block as still in progress and will therefore put the next person who enters the survey onto a fresh block.

2) As stated, we need 200 people. Let's assume that, of the first 50, 5 people fail and are removed from our survey. As far as I understand, the 5 blocks assigned to these people are stuck in limbo as "response in progress" until completed. However, they will never be completed, because the participants were removed. If I am correct, the blocks completed by people who finish the survey will then be opened up again for the next participant. In the worst case, say 25 people fail: once the first 200 blocks are assigned, I believe the randomizer will rotate onto blocks that previous participants have already finished, meaning we will get duplicate ratings of the same documents.
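To make the worry concrete, here is a small Python simulation of the behaviour described above. It assumes (this is my reading of the post, not confirmed Qualtrics behaviour) that the randomizer balances on blocks presented and never releases a block whose participant was screened out. The failure pattern, every 9th participant giving 25 failures out of 225, is made up.

```python
import random

N_BLOCKS = 200

def simulate(n_participants, fail_ids, seed=0):
    """Assumed model: the even-distribution counter goes up when a block is
    presented and is never decremented, even if the participant is removed."""
    rng = random.Random(seed)
    presented = [0] * N_BLOCKS   # what the randomizer balances on (assumption)
    completed = [0] * N_BLOCKS   # usable ratings we actually end up with
    for p in range(n_participants):
        fewest = min(presented)
        block = rng.choice([b for b, c in enumerate(presented) if c == fewest])
        presented[block] += 1
        if p not in fail_ids:    # participant passes the checks and finishes
            completed[block] += 1
    return completed

# Hypothetical: 225 participants, every 9th one fails a check after the randomizer
failed = set(range(0, 225, 9))
completed = simulate(225, failed)
never_rated = sum(1 for c in completed if c == 0)
rated_twice = sum(1 for c in completed if c >= 2)
print(len(failed), sum(completed), never_rated, rated_twice)
```

Under these assumptions the number of blocks left with no usable rating always equals the number of blocks rated twice: 200 usable ratings are spread across 200 blocks with at most two per block, so every gap is matched by a duplicate.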

Solution?:

1) Is there any way to combat this issue? Having one person complete one block is both time-consuming and expensive, so we do not want the same blocks being redone.

Any ideas or help would be greatly appreciated. Thanks.

Controlling for even distribution of randomization knowing people will quit/not complete the survey

Best answer by wpm24

TL;DR: Yes, there are some things you can do, but your issue is compounded by having to pay Prolific for participants who successfully complete the survey, and how you define 'success' will influence your strategy.

As someone who is also required to use Prolific rather than MTurk, I find that one of the best things about it is data quality. Participants are generally more attentive and actually engage with the survey rather than just clicking through quickly for money. That being said, there isn't much more you can do (beyond what you are already doing) to preserve data quality.

You have a compounded issue. First, there is the number of participants you pay Prolific for and the inclusion criteria at the Prolific level; then there is the quality-control issue at the Qualtrics level. For example, let's say that for your advertisement and desired sample pool on Prolific, you allow only men and women older than 22 and younger than 45 to participate. If that is the only criterion, then anyone who participates in your survey on Qualtrics should technically still be paid and counted toward the 200-person sample you paid Prolific for, even if they fail your quality-control measures. Prolific does allow you to refuse payment to a participant, but you must have a good reason. Prolific isn't strict about what counts as a good reason, but you would have to provide compelling evidence beyond just 'they failed an attention check'. So keep that in mind going forward. I always over-recruit by about 10% of the required sample size for this reason.
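The 10% over-recruitment rule of thumb is simple arithmetic. Here is a tiny Python helper for it; the function name and the percentage parameter are hypothetical and not part of any Prolific tooling. It rounds up using integer maths so that floating-point error cannot add a spurious extra place.

```python
def places_to_request(required, overrecruit_pct=10):
    """Places to request when over-recruiting by a whole-number percentage.

    Integer ceiling division avoids float rounding
    (200 * 1.1 is not exactly 220 in floating point).
    """
    extra = -(-required * overrecruit_pct // 100)  # ceil(required * pct / 100)
    return required + extra

print(places_to_request(200))      # 220
print(places_to_request(200, 12))  # 224
```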

For the actual survey I have some follow up questions/suggestions.

1) Can your quality-control questions come before participants are randomly assigned to one of the blocks with 25 texts? If so, you can have Qualtrics end the survey when they fail one of these questions and send them back to Prolific with or without their completion code. This is done with simple if/then branching logic, where the 'then' is to end the survey with a special message explaining why they are being terminated early and/or whether they will get their completion code; this is a personal choice. If they are kicked out BEFORE the randomization block, they will not be counted in the quota for even distribution. If they return without their completion code, Prolific will keep searching for participants until 200 participants return with their completion code, indicating that they successfully completed the survey. However, you may get angry messages from participants if you kick them out without a completion code, even with an end-of-survey message explaining why.

2) If the quality-control measures come AFTER the randomization block, so that participants have already been included in the quota data, then what you may want to do (and what I would recommend from personal experience) is run the analysis with and without the participants who fail the quality-control measures and report any differences in the manuscript you write up. I would choose this option because quality-control measures are not that well understood, especially with regard to professional survey takers.
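A minimal Python sketch of option 2: compute per-document mean ratings with and without the participants who failed a quality-control item, then look at the difference. The ratings, participant IDs and the `failed_qc` set are made-up placeholders; in practice you would substitute whatever analysis you actually run for the simple mean.

```python
from statistics import mean

# Hypothetical long-format ratings: (participant_id, doc_id, rating)
ratings = [
    ("p1", "shared_0", 4), ("p1", "shared_1", 2),
    ("p2", "shared_0", 5), ("p2", "shared_1", 3),
    ("p3", "shared_0", 1), ("p3", "shared_1", 5),
]
failed_qc = {"p3"}  # hypothetical: participants who failed a quality-control item

def mean_by_doc(rows, exclude=frozenset()):
    """Mean rating per document, optionally dropping excluded participants."""
    by_doc = {}
    for pid, doc, score in rows:
        if pid not in exclude:
            by_doc.setdefault(doc, []).append(score)
    return {doc: mean(scores) for doc, scores in by_doc.items()}

with_all = mean_by_doc(ratings)
without_failed = mean_by_doc(ratings, exclude=failed_qc)

# Report both versions side by side, plus the shift caused by exclusion.
for doc in sorted(with_all):
    print(doc, round(with_all[doc], 2), round(without_failed[doc], 2),
          round(without_failed[doc] - with_all[doc], 2))
```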

I'm happy to discuss this more, but what you describe sounds more like a data-quality issue than a Qualtrics-specific issue.
