Hello All,

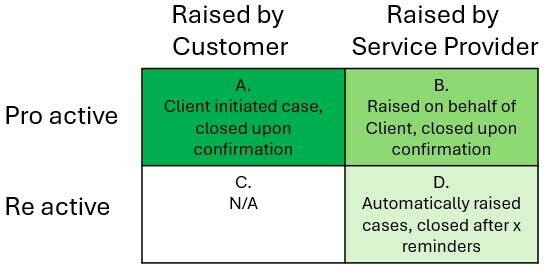

I manage our support case satisfaction surveys, which are sent out after a case is closed. We are struggling with the question of whether we should still send a survey for a proactive case or an auto-closed case.

- Proactive cases are opened on behalf of a customer based on a trigger within their own product usage. This type of case warns them of risk within their system.

- Auto-closed cases are closed when a customer has not responded within a certain period of time.

These types of cases are hurting our response rates and, on occasion, our CSAT scores. How do you handle these audiences within your organization? Why did your organization decide to send, or not send, surveys to them?