This month we're highlighting course evaluations. Read below for a few helpful tips on setting up your course evaluations program:

- Build your course evaluations
    - Quickly build course evaluations from a bank of expert-designed questions within Qualtrics. (Reach out to your account rep if you need help here.)
    - Use the template course evaluation questions to build internal benchmarks.
    - Add questions specific to the course, instructor, department, etc.

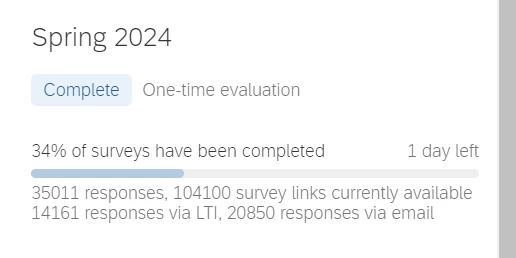

- Launch your program
    - Drive response rates by giving students a single course evaluation experience that covers multiple courses and instructors.
    - Consider integrating with your learning management system (LMS) via LTI (Learning Tools Interoperability).
    - Set up feedback reminders for students.

- Take action on your insights
    - Set up reporting dashboards, which are mobile-optimized, user-friendly, and easy to navigate.
    - Set up role-based permissions so that each user sees only the results relevant to them. For example, a professor can view feedback only for their own courses, while a department chair can view data for the entire department or filter by instructor, course, semester, year, division, etc.

How is your institution currently using Qualtrics to improve its courses? What advice would you give to other institutions setting up their course evaluations?

Click here to view a course evaluation QSF.